Kernighan and Pike were right: Do one thing, and do it well

Extensible programs like Obsidian have achieved a Holy Grail of software architecture, after decades of failed attempts.

In October 1984 two ideologues published a radical manifesto… sort of. Program Design in the UNIX Environment, by comp-sci legends Brian Kernighan and Rob Pike, articulated a pattern for software architecture that both men had already spent years fighting to preserve.

Rob Pike and Brian Kernighan

Admittedly, as far as manifestos go, it’s about as spicy as you’d expect from two Canadian engineers. Its most pointed jab is this memorable line from the abstract:

Old programs have become encrusted with dubious features.

The crux of the paper is often summed up by the acronym DOTADIW, “Do One Thing And Do It Well”. Unix and its descendants are full of programs that embody this mantra: ls just lists files, cat just outputs file contents, grep just filters data, wc just counts words, etc. Each program has a few options that change its behavior, but not too many. For example: wc can be configured to count lines or words, but it can’t count the number of paragraphs or the occurrences of a specific phrase.

The power of Unix, as championed by Kernighan and Pike, was the ability to chain these simple programs together to create complex behaviors. Why add regex matching to wc when grep already does that? To count the number of functions in a Rust file you could run:

cat main.rs | grep "^\s*fn\s" | wc -l

Translated from Bash jargon to plain English: Read the file, filter it to just the lines containing functions (lines where the first non-whitespace text is fn), and then count those lines. The pipe operator (|) simply moves the output of one program into the input of the next.
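The same composition can be sketched outside the shell. The snippet below is only an illustration in Python, not how cat, grep, or wc are actually implemented: the function names (read_lines, grep, count) and the sample Rust source are made up for this example. Each function does one job, and the nesting plays the role of the pipe.

```python
import re
from typing import Iterable, Iterator


def read_lines(text: str) -> Iterator[str]:
    """Stand-in for `cat`: yield the input one line at a time."""
    yield from text.splitlines()


def grep(pattern: str, lines: Iterable[str]) -> Iterator[str]:
    """Stand-in for `grep`: keep only the lines that match the pattern."""
    regex = re.compile(pattern)
    return (line for line in lines if regex.search(line))


def count(lines: Iterable[str]) -> int:
    """Stand-in for `wc -l`: count the lines that reach the end of the chain."""
    return sum(1 for _ in lines)


# Hypothetical Rust source, used only as sample input.
SAMPLE = """fn main() {
    helper();
}

    fn helper() {}
// fn in a comment does not start the line
"""

function_count = count(grep(r"^\s*fn\s", read_lines(SAMPLE)))
print(function_count)
```

None of the three functions knows anything about the others; swapping the pattern or the final step changes the behavior without touching the rest of the chain.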

This is a great idea! The simple programs that comprise this command are easy to develop and maintain. In fact, they’re so simple that they might genuinely be free of bugs — a feat almost laughably implausible for any more complicated piece of software.

Unfortunately, as with most manifestos, this ideal doesn’t hold up to real-world scrutiny. Unix programs can only communicate in one direction, and only by sending streams of text. The model made some amount of sense in a terminal environment, but never successfully made the jump to desktop operating systems. So popular modern programs like Photoshop and Word are about as “encrusted with dubious features” as it’s possible to be. Kernighan and Pike’s beautiful idea never came to fruition.

Enshittification

Let’s assume Kernighan and Pike were right about at least one thing: that this software bloat is a problem. Massive apps are hard to learn, hard to use, and buggy as hell. Some of this is a UX problem, but most of it is actually a symptom of the developer experience.

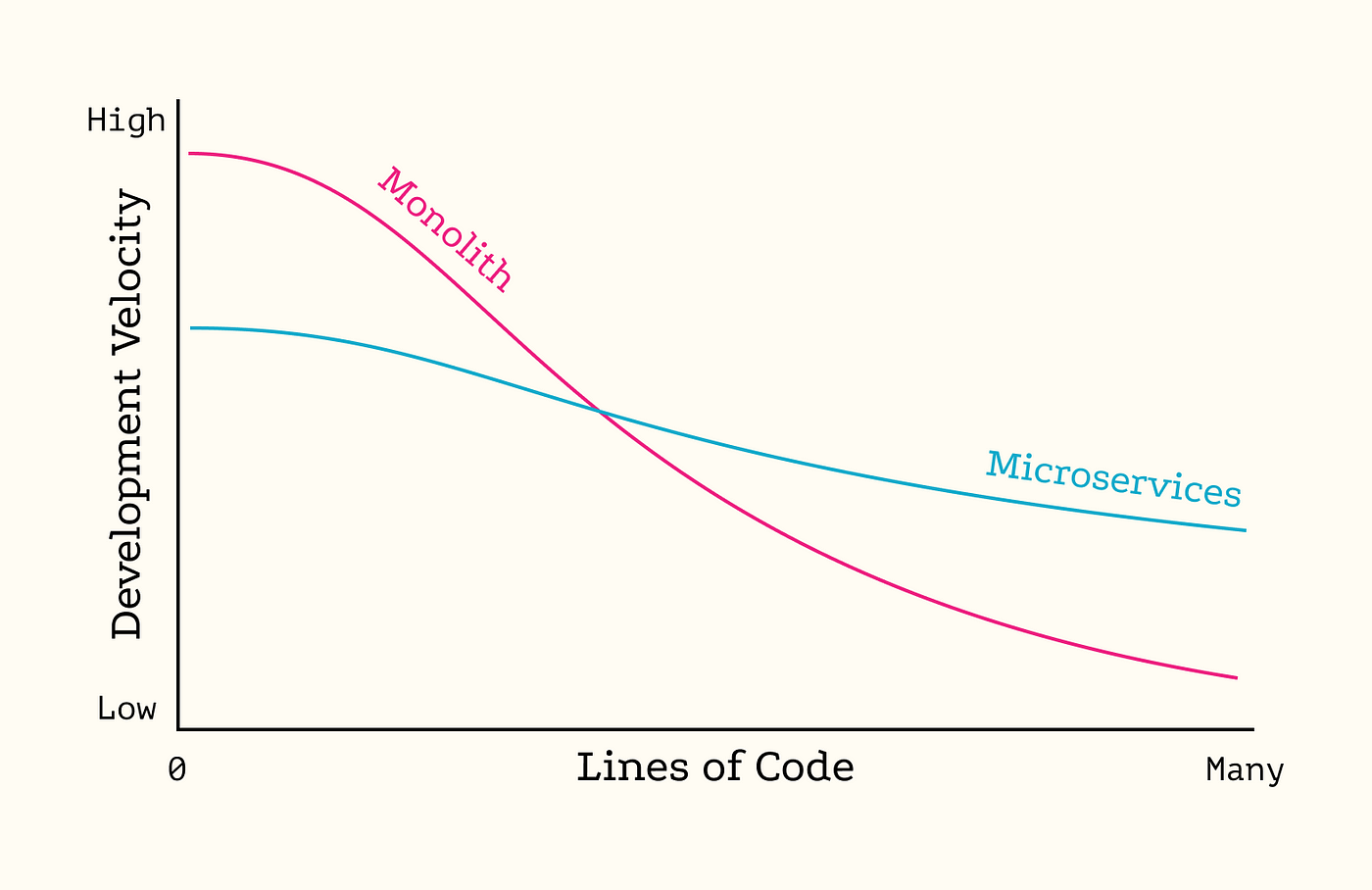

Big monolithic apps have large codebases that slow down development velocity. They’re slower to compile, harder to test, and full of dark corners where bugs can lurk and multiply. A bad change to one part of a codebase can cause headaches for an entire building’s worth of developers, tanking productivity for hours or days.

But, of course, users don’t really care about development velocity or codebase size. Unfortunately, those quantities are inextricably linked to the things users do care about: speed, price, features and, most importantly, reliability.

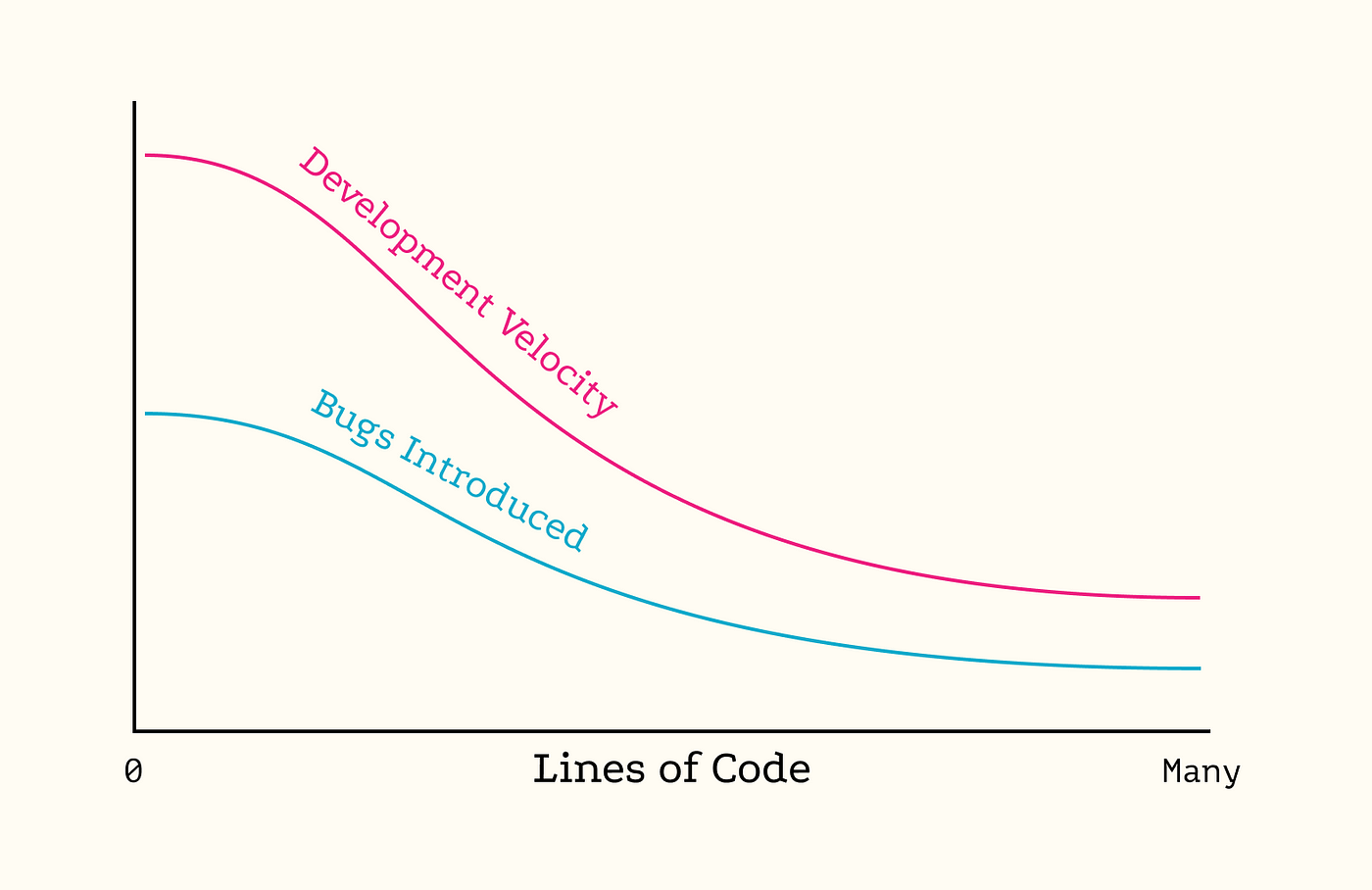

In general, the rate at which bugs are introduced is proportional to the development velocity. Each line of code has some probability of causing a bug — call it Pᵇᵘᵍ — so fewer lines added means fewer chances for bugs to spawn. As development velocity slows down the rate of new bugs should slow with it.

Unfortunately this relationship is not fully constant; there are two forces that skew the balance for the worse as the size of a codebase increases.

First, lower development velocity forces teams to make difficult trade-offs to deliver work on time, which often means compromising on quality. After all, management will be upset about a delayed feature but probably won’t even notice a delayed bug fix. So as development velocity decreases, bug-fix velocity inevitably decreases even more.

Second, Pᵇᵘᵍ increases as the codebase grows larger. I’ve written before about how the most pernicious bugs are structural, not algorithmic, i.e. caused by two sub-systems interacting in unexpected ways, not by off-by-one errors or math mistakes. Therefore, as the number of sub-systems and modules increases, Pᵇᵘᵍ increases with it. At a large enough scale it’s likely that neither a commit’s author nor its reviewers will understand the full context well enough to squash bugs before they’re submitted.

And, of course, the slower velocity and higher Pᵇᵘᵍ mean that fixing newly-added bugs is time-consuming and prone to introducing even more bugs.

Bugs Introduced is something like Velocity × percent of time spent on new features × Pᵇᵘᵍ

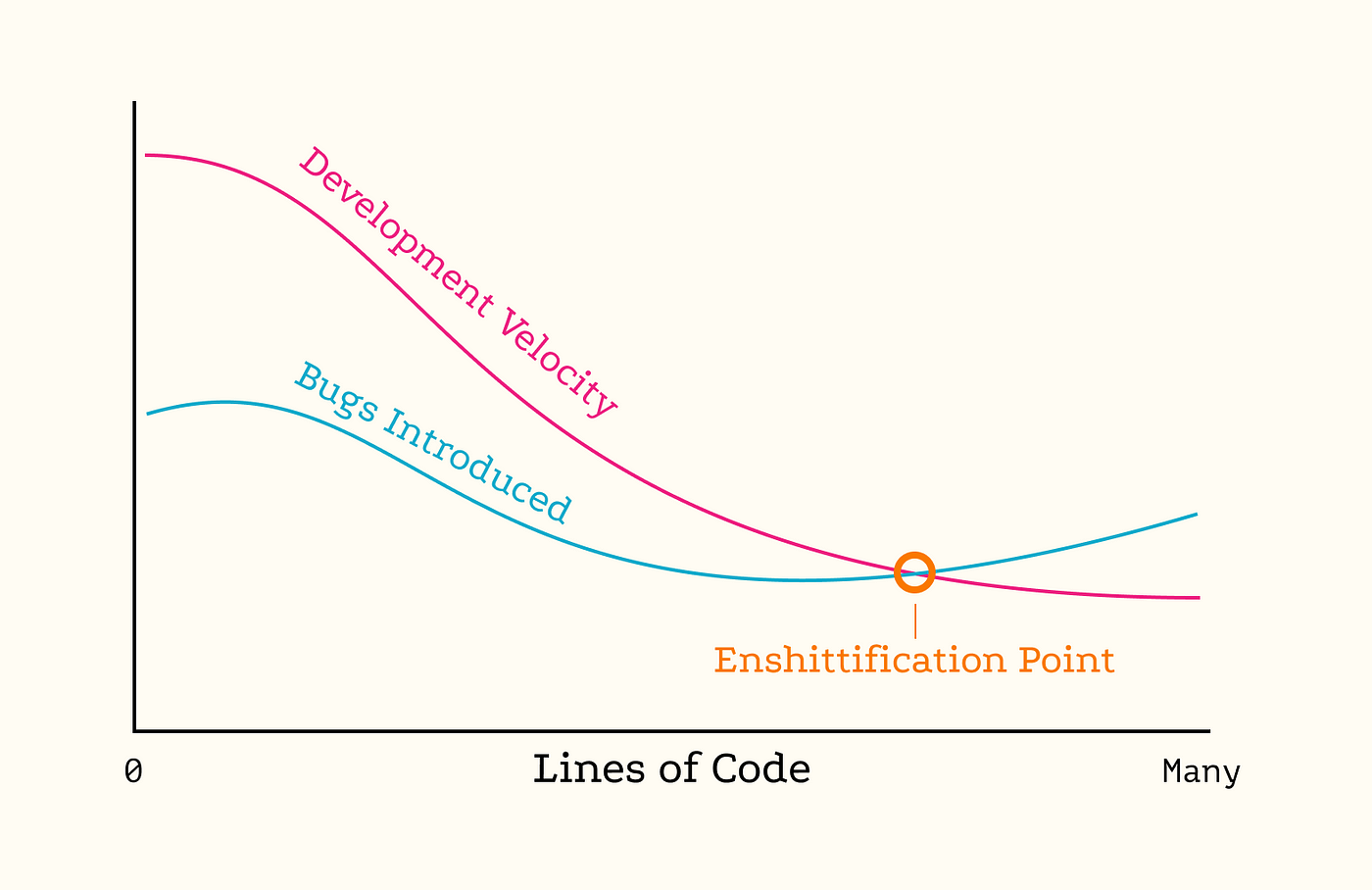

Large codebases will eventually reach an “enshittification point”: the point at which bugs are introduced faster than they can reasonably be fixed. The exact size at which this occurs varies from team to team and architecture to architecture, but even the most talented engineers will eventually encounter enshittification if their codebase is allowed to grow unchecked. Putting it all together: Large monolithic codebases produce shitty software.
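To make the rough formula above concrete, here is a toy calculation. Every name and number in it is an invented assumption rather than a measurement: velocity in lines per week, the share of time spent on features, the per-line bug probability, and especially the bugs_fixed model, which simply assumes that fixes scale with the time left over after feature work.

```python
def bugs_introduced(velocity: float, feature_share: float, p_bug: float) -> float:
    """Expected new bugs per week: velocity x share of time on features x P_bug."""
    return velocity * feature_share * p_bug


def bugs_fixed(velocity: float, feature_share: float, fixes_per_line: float) -> float:
    """Expected bugs fixed per week from the time not spent on features (assumed model)."""
    return velocity * (1.0 - feature_share) * fixes_per_line


# Hypothetical small codebase: fast, low P_bug, a healthy share of time for fixes.
small_in = bugs_introduced(velocity=1000, feature_share=0.7, p_bug=0.01)
small_out = bugs_fixed(velocity=1000, feature_share=0.7, fixes_per_line=0.03)

# Hypothetical large codebase: slower, higher P_bug, fixes squeezed out by deadlines.
large_in = bugs_introduced(velocity=400, feature_share=0.9, p_bug=0.03)
large_out = bugs_fixed(velocity=400, feature_share=0.9, fixes_per_line=0.03)

print("small codebase keeps up:", small_in < small_out)
print("large codebase falls behind:", large_in > large_out)
```

With these made-up inputs the small codebase fixes bugs faster than it creates them, while the large one has crossed the point where the backlog only grows. The crossover depends entirely on the assumed numbers; the sketch shows the shape of the argument, not where the threshold sits.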

But there’s still an open question: in practice, can smaller codebases produce software that’s any less shitty? Or do they come with problems of their own?

Microservices: DOTADIW at scale

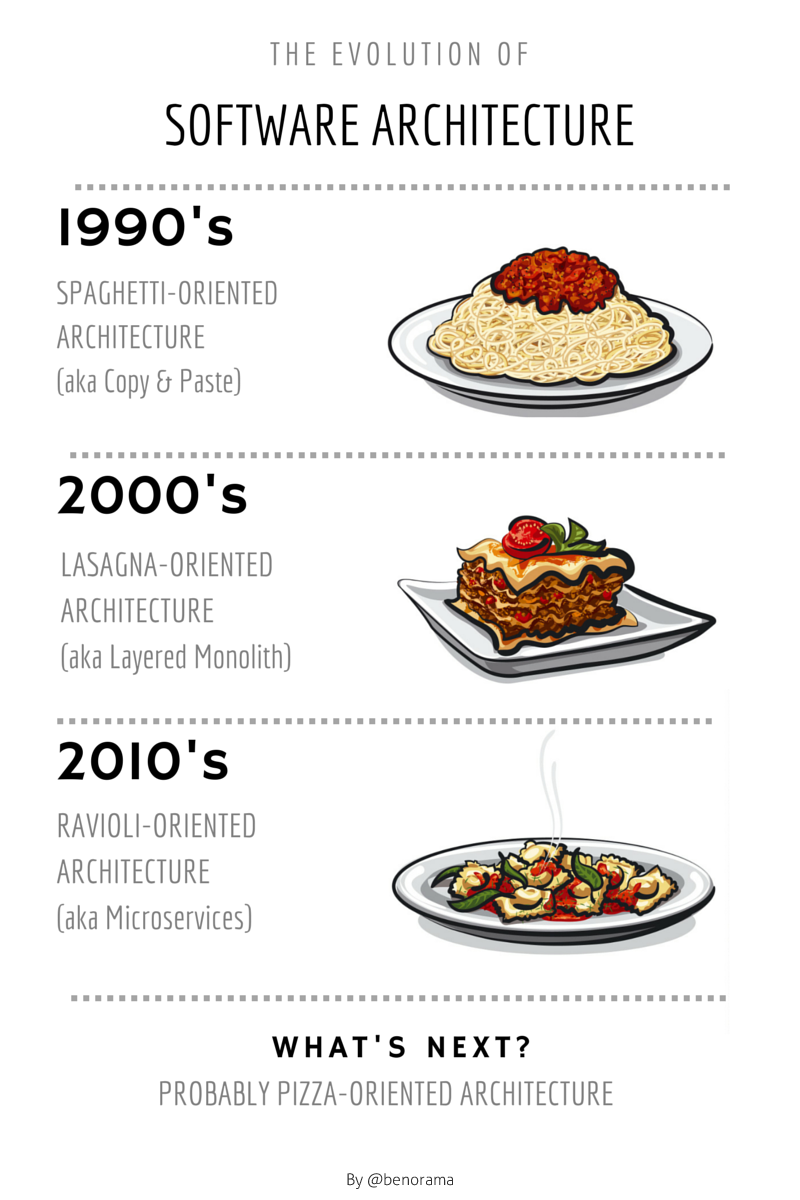

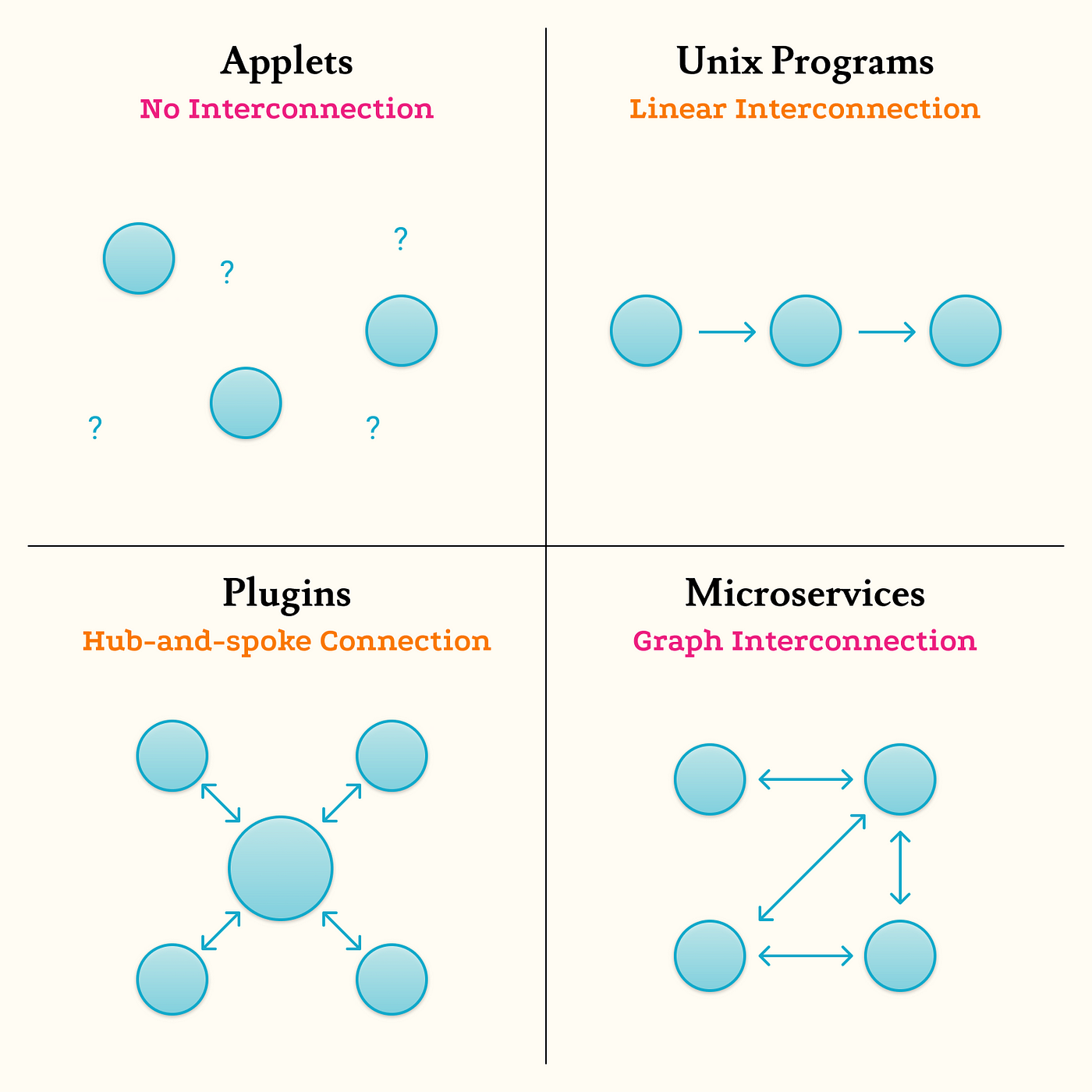

Source: The Evolution of Software Architecture (Benoit Hediard)

The merit, or toll, of the Microservices architecture is hotly debated. The basic idea is that large servers should be split up into discrete pieces that are each responsible for one aspect of a system. One server might handle authentication while another tracks user preferences and another runs spam detection. In other words: Microservices are meant to Do One Thing And Do It Well.

Much has been written about instances where the Microservices architecture works well and where it doesn’t. Here are some resources I found helpful:

- Amazon Prime Video’s Microservices Move Doesn’t Lead to a Monolith after All [The New Stack]

- When Microservices Are a Bad Idea [Tomas Fernandez]

- Breaking Up a Monolith: Kong Case Study [Marco Palladino]

The general pattern I’ve seen is this:

Writing microservices is hard. There’s just a lot of overhead involved in architecting a network of microservices, and a lot of boilerplate code necessary to get things configured and oriented. Microservices are only “worth it” once a project exceeds a certain size. At that point, their relatively fast compile times and well-codified sub-component interactions increase development velocity and decrease Pᵇᵘᵍ, allowing codebases to grow extremely large without (necessarily) enshittifying.

On the other hand, some projects genuinely work better as monoliths. There is a performance penalty to using microservices. That may or may not be an important factor depending on the characteristics of a particular project. There’s a reason software architects are paid so well: these decisions involve countless factors and tradeoffs that could easily cost, or save, millions of dollars in the long term.

At the very least, Microservices are a great tool for a server developer to keep in their back pocket. Having more tools at one’s disposal is always better than having fewer. I’m largely a frontend developer, so I’m getting jealous! What axe can I wield against the forces of enshittification?

Applets?

Splitting services into small pieces yields microservices, so splitting frontends into small pieces obviously yields “microfrontends”. But microfrontends are really just a modern rebranding of an old idea: applets.

A typical Java Applet from the early 2000s

“Applet” is the diminutive form of the word App, like “Booklet” to Book or “Piglet” to Pig. It’s a pithy word for a small program with narrow goals, one that has not become encrusted with dubious features.

Unfortunately it seems most applet creators stopped reading halfway through Kernighan and Pike’s mantra: though applets Did One Thing, they rarely ever Did It Well. The word applet is usually synonymous with Java, though we could reasonably include ActiveX and Adobe Flash under that umbrella as well. If you’re too young to remember a time when random parts of websites were broken because some plugin or another was “too old” or “too new” or “not supported on your OS”… I don’t know, congratulations? You didn’t miss anything good.

More importantly, applets were almost completely stand-alone. There was no way to chain multiple applets together into a workflow, à la Unix commands. Even their interactions with the OS and file system were necessarily limited in the name of security. They were “small apps” in the way that ride-on toy Range Rovers are “compact cars”: fun, but useless.

So maybe it’s time to throw “Do One Thing And Do It Well” for frontends into the pile of nice-but-unworkable ideas alongside zeppelins and communism. The Unix terminal didn’t exactly take the world by storm, nor did applets. Maybe enshittification is just a fact of life for frontend engineers, a fundamental consequence of entropy, a gradual decay akin to our own aging bodies.

Or maybe applets just did it wrong.

Plugins!

I recently wrote about the old standard-bearer for desktop software: native Mac apps. Specifically, I wrote about how they faded into irrelevance. A big reason for this is that smartphones totally cannibalized the “personal” side of Personal Computing. To borrow an analogy from Steve Jobs, laptops and desktops went from being fun family sedans to being workhorse trucks.

A better analogy is to a tractor. A truck has only one job: take stuff from here and move it over there. Modern tractors can be configured to serve dozens of purposes: they can till the ground, sow seeds, plow snow, dig holes, etc. More specifically, they provide a common platform that other specialized tools literally “plug in” to.

A tractor with all the fixins

This is a common design pattern for industrial equipment. Digital cinema cameras are skeletal rigs of filmmaking hardware built around an image sensor. Robotic arms can be configured with dozens of different tools for manufacturing. Some buildings now are constructed by assembling pre-fabricated parts atop a standard foundation.

To fill their new steel-toed shoes, the devices formerly known as Personal Computers will need to adopt this industrial mindset. The same goes for the software that runs on them. That’s not to say software can’t still be fun and user-friendly, but above all it must be flexible. Apps can either grow to encompass every possible feature and workflow or they can evolve into extensible platforms where users bolt on new components as needed.

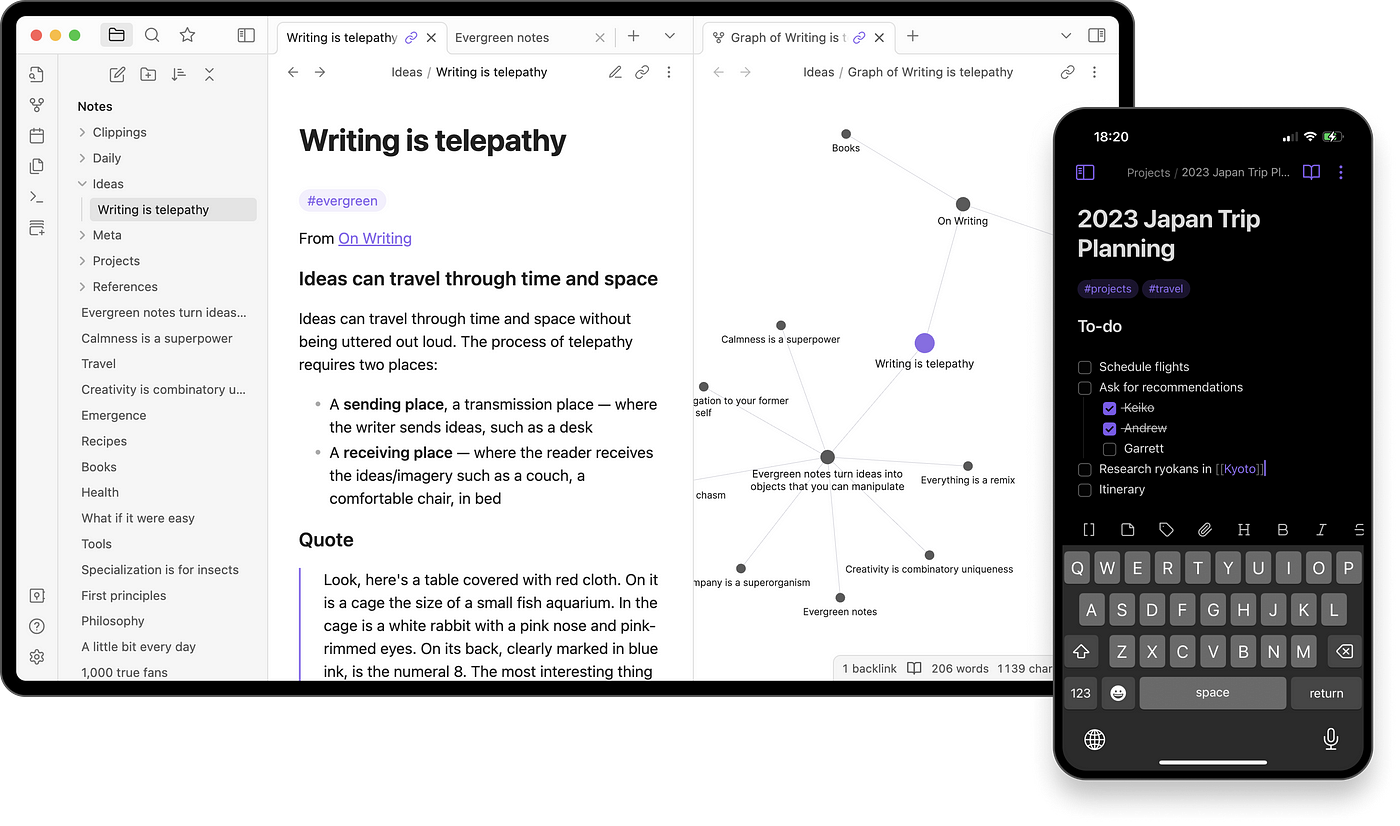

The best recent example of this is the writing app Obsidian. On the surface it may not look much like a piece of heavy machinery. In fact, it’s rather cute.

Obsidian 1.4 running on macOS and iOS

At its core, Obsidian is a markdown editor. It does that well. But its real magic is its plugin ecosystem. Obsidian plugins aren’t confined to sidebars or menu items; they can add new functionality to the ‘canvas’ where content is edited and consumed. People have written plugins that embed code, generate indexes, and even integrate LLMs directly into common writing workflows. The Obsidian subreddit almost feels like a community of truckers showing off their rigs. It’s software for tinkerers.

A good extensible desktop app should embrace being an “operating system” of sorts, but one built around a specific kind of canvas. VS Code is a canvas for programming projects, Blender is a canvas for 3D environments, Figma is a canvas for UI design. Each app has solid editing features built in, but each is elevated by a rich plugin ecosystem that brings new functionality directly into the canvas.

This is easier said than done. Keeping plugins from stepping on each other’s toes when they all share access to a central canvas is a bit like herding cats. It’s a hugely challenging API design problem, but at least it’s just the one. Unlike microservices, plugins don’t involve complex networks of components each with their own interconnections. Everything is organized around a central hub that can enforce some degree of order.

Ideally that central hub should be as small as possible, like an operating system kernel. In Obsidian even core features of the app, like the file browser and the search bar, are implemented as plugins. Users directly benefit from the customizability this offers (don’t like the file browser? Just replace it!), and indirectly benefit from the high development velocity it enables. Obsidian is a fully-fleshed-out piece of software built by 7 humans and a cat. In my experience, it’s remarkably reliable and quickly improving.
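Here is a rough sketch of that hub-and-spoke shape, written in Python for brevity. It is not Obsidian’s plugin API: the Hub class, its register and emit methods, and both example plugins are hypothetical names invented for this illustration. The point is only that plugins talk to the hub, never directly to each other, and that the hub itself stays tiny.

```python
from typing import Callable, Dict, List

# A handler receives the shared document (the "canvas") and returns a new version.
Handler = Callable[[str], str]


class Hub:
    """Deliberately tiny core: it only stores handlers and runs them in order."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = {}

    def register(self, event: str, handler: Handler) -> None:
        """Attach a plugin's handler to a named event."""
        self._handlers.setdefault(event, []).append(handler)

    def emit(self, event: str, document: str) -> str:
        """Pass the document through every handler registered for the event."""
        for handler in self._handlers.get(event, []):
            document = handler(document)
        return document


def shout_plugin(document: str) -> str:
    """Hypothetical plugin: upper-case every heading line."""
    lines = [line.upper() if line.startswith("#") else line
             for line in document.splitlines()]
    return "\n".join(lines)


def word_count_plugin(document: str) -> str:
    """Hypothetical plugin: append a word count footer."""
    return f"{document}\n\nWords: {len(document.split())}"


hub = Hub()
hub.register("render", shout_plugin)
hub.register("render", word_count_plugin)

rendered = hub.emit("render", "# notes\nplugins share one canvas")
print(rendered)
```

Because every change flows through emit, the hub is the single place where ordering, permissions, or conflict rules could be enforced. Removing a plugin is just a matter of not registering it.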

Obsidian doesn’t Do One Thing and Do It Well. Rather it has pulled off the miraculous feat of Doing Many Things and Doing Them Well. But that’s somewhat of a mirage — Obsidian isn’t one app, it’s more like a multi-cellular organism. Each part is a separate and specialized unit, as Kernighan and Pike intended. Together they create the sort of emergent behavior that was supposed to be possible with the Unix shell. In that sense, Obsidian and its ilk (like VS Code) have achieved a holy grail of software development.

So what’s the key? Why now? What is it about the plugin model that makes it work where other models have faltered?

Putting the pieces together

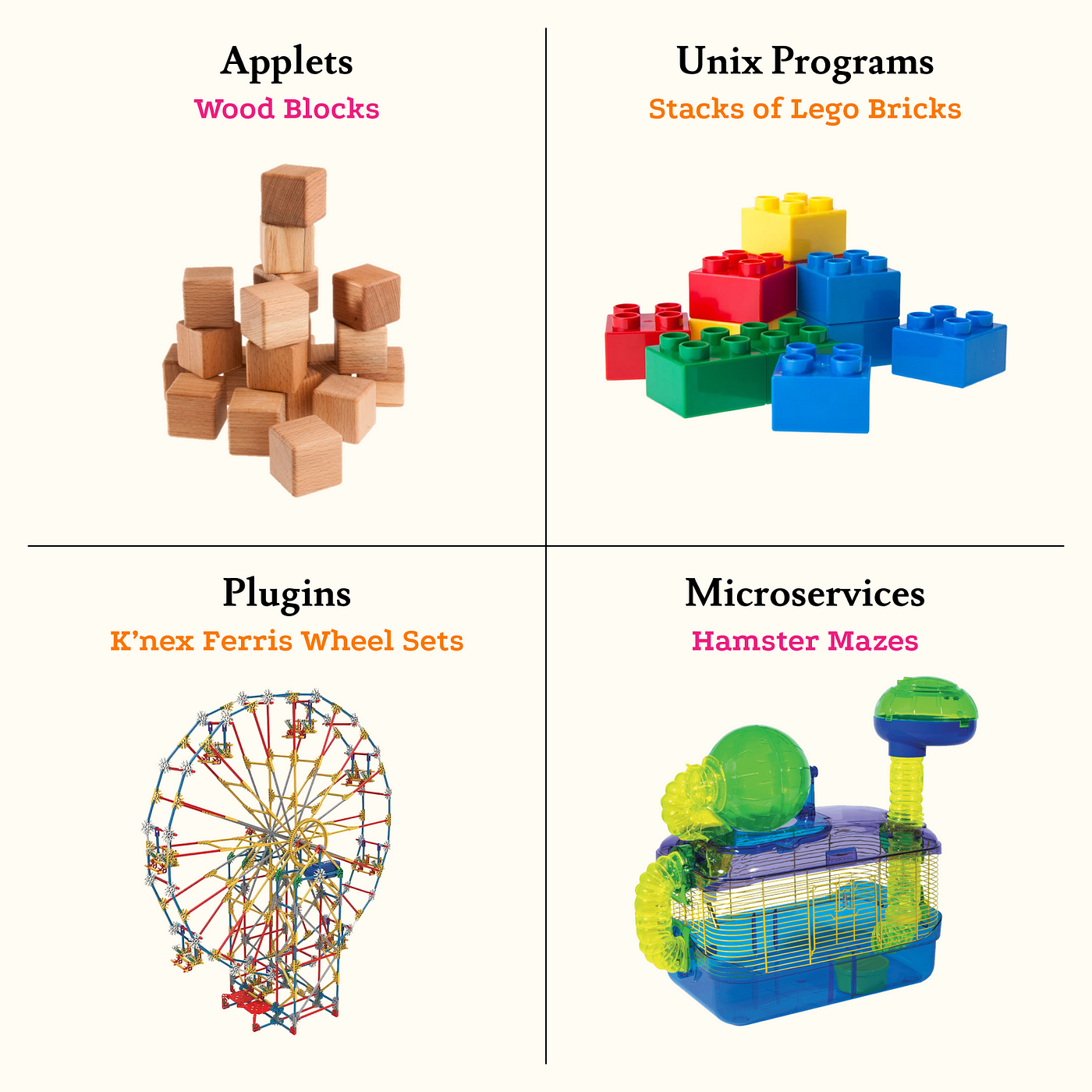

Tiny single-purpose pieces of software aren’t all that useful on their own, by definition. They only become useful when combined in ways that allow more complex behavior to emerge. Applets failed as a concept because they couldn’t be combined at all. The differences between other models largely come down to the specific way pieces are snapped together.

A good analogy is construction toys. A single Lego™ Brick (you’re welcome, pedants) is not much use, but it can snap together with other bricks to form beautiful structures. These software architectures work the same way.

Wood blocks don’t snap together at all. Lego bricks do, but only in one dimension — they can only form lines and planes. K’nex can snap together at angles around a central hub. And hamster mazes are just the wild west of sticking stuff together in haphazard ways.

Or, dropping the contrived analogy:

The hub-and-spoke model used for plugins works because it’s “Just Right”: flexible enough to create complex behaviors, but not so flexible that apps become impossible to debug or maintain. The model allows plugins to share a single canvas, and even indirectly communicate with each other, while still forcing them to follow a consistent set of rules.

There’s still a place for microservices as well. The hub-and-spoke model could just as easily be called the Atomic Model — small independent electrons (plugins) orbiting around a solid nucleus. Apps, like atoms, can only hold so many pieces before they become unstable. The problem with Uranium isn’t that it’s poorly designed but simply that it’s bloated.

Microservices resemble another microscopic unit of matter, the molecule. Molecules can remain stable even with millions of pieces, but at the cost of complexity: Atomic physics has just a few concrete laws, while molecular chemistry is full of rules-of-thumb and guesswork.

As grandiose as it sounds to compare software engineering to quantum physics, I do think the two domains have a lot in common. All of nature, from the brightest star to the humblest potato, is composed of units of simple matter. Every diverse aspect of reality emerges from some particular arrangement of those units. Similarly, a successful software architecture is one where single-purpose units of code are arranged into a synergistic whole.

A scientist may study a complex object under a microscope to observe its fundamental structure. An engineer has a similar task, only there’s no microscope. Instead, engineers must invent each atom and molecule, each system and subsystem, until they eventually build up to the desired result. It’s an impossible job to master. We all strive to do the best we can, and we all often fail.

There are no easy answers, but there are clues all around us. To determine the ideal structure for complex software, consider the structures that have survived billions of years of cutthroat evolution: atoms and molecules, solar systems and galaxies, our own internal organs. Tractors and cameras. Obsidian and VS Code. Each is built of small single-purpose units. Just like Kernighan and Pike intended.